Abstract: We study how to apply large language models to write grounded and organized long-form articles from scratch, with comparable breadth and depth to Wikipedia pages. This underexplored problem poses new challenges at the pre-writing stage, including researching the topic and preparing an outline before writing. We propose STORM, a writing system for synthesizing Topic Outlines through Retrieval and Multi-perspective Question Asking. STORM models the pre-writing stage by (1) discovering diverse perspectives in researching the given topic, (2) simulating conversations where writers carrying different perspectives pose questions to a topic expert grounded on trusted Internet sources, and (3) curating the collected information to create an outline.

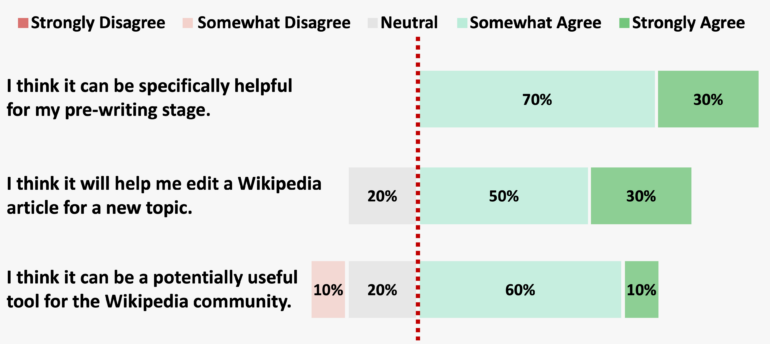

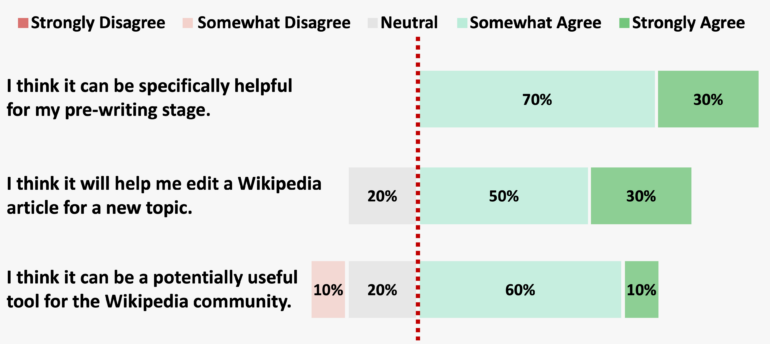

For evaluation, we curate FreshWiki, a dataset of recent high-quality Wikipedia articles, and formulate outline assessments to evaluate the pre-writing stage. We further gather feedback from experienced Wikipedia editors. Compared to articles generated by an outline-driven retrieval-augmented baseline, more of STORM’s articles are deemed to be organized (by a 25% absolute increase) and broad in coverage (by 10%). The expert feedback also helps identify new challenges for generating grounded long articles, such as source bias transfer and over-association of unrelated facts.

Introducing the Pre-writing Challenge

Much work needs to be done before actual generation can start.

Generating long articles with citations is hard to do and hard to evaluate. We break it down into two stages:

Pre-writing stage: Given a topic, the system collects references from a large corpus (e.g., the Internet) and generates an outline.

Writing stage: Given the topic and the collected references, the system generates a full-length article with citations.

We define metrics and collect the FreshWiki dataset to evaluate the quality of the pre-writing stage. Our results demonstrate a correlation between pre-writing quality and final article quality.
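The two-stage split can be sketched in a few lines of Python. This is a minimal illustration and not the STORM implementation: the names `PreWritingResult`, `pre_write`, `write_article`, and the `toy_*` stand-ins are all hypothetical, and the retrieval and generation steps are replaced by placeholder functions that return fixed strings.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class PreWritingResult:
    """Output of the pre-writing stage: collected references plus an outline."""
    topic: str
    references: List[str] = field(default_factory=list)
    outline: List[str] = field(default_factory=list)


def pre_write(
    topic: str,
    collect_references: Callable[[str], List[str]],
    draft_outline: Callable[[str, List[str]], List[str]],
) -> PreWritingResult:
    """Pre-writing stage: gather references, then build an outline from them."""
    references = collect_references(topic)
    outline = draft_outline(topic, references)
    return PreWritingResult(topic, references, outline)


def write_article(
    result: PreWritingResult,
    write_section: Callable[[str, str, List[str]], str],
) -> str:
    """Writing stage: produce one cited section per outline heading."""
    sections = [
        write_section(result.topic, heading, result.references)
        for heading in result.outline
    ]
    return "\n\n".join(sections)


# Toy stand-ins (assumptions): a real system would call a search engine and an LLM.
def toy_collect(topic: str) -> List[str]:
    return [f"https://example.org/source-{i}" for i in (1, 2)]


def toy_outline(topic: str, references: List[str]) -> List[str]:
    return ["Background", "Applications"]


def toy_section(topic: str, heading: str, references: List[str]) -> str:
    citations = "".join(f"[{i}]" for i in range(1, len(references) + 1))
    return f"{heading}\nText about {topic}. {citations}"


result = pre_write("solar sails", toy_collect, toy_outline)
article = write_article(result, toy_section)
```

Keeping the pre-writing output as its own object makes it possible to inspect or score the outline before any article text is generated.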

Finding Highlights

Automatic Evaluation: STORM outperforms strong retrieval-augmented generation baselines across all automatic metrics, including LM eval and metrics comparing with human-written articles.

Fast Prototyping

Evaluating outline quality in the pre-writing stage is an effective way to prototype the report generation system.
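To make the idea of scoring an outline concrete, here is a deliberately simple stand-in metric. The text above does not spell out the outline assessments, so this is only an assumption for illustration: it measures the fraction of headings in a human-written reference outline that also appear in the generated outline, using exact matching after case and whitespace normalization. The function names are hypothetical.

```python
from typing import Iterable


def normalize(heading: str) -> str:
    """Lowercase a heading and collapse runs of whitespace."""
    return " ".join(heading.lower().split())


def heading_recall(generated: Iterable[str], reference: Iterable[str]) -> float:
    """Fraction of reference headings that appear in the generated outline."""
    reference_set = {normalize(h) for h in reference}
    if not reference_set:
        return 0.0
    generated_set = {normalize(h) for h in generated}
    return len(reference_set & generated_set) / len(reference_set)


score = heading_recall(
    ["History", "  design ", "Reception"],
    ["History", "Design", "Legacy", "Impact"],
)
```

Exact matching is a crude choice, since it misses paraphrased headings; a softer similarity measure would be needed for serious evaluation.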

Expert Evaluation

In our human evaluation with experienced Wikipedia editors, all participants agreed that the system is helpful for their pre-writing stage.

Error Analysis

Through careful error analysis (see Appendix C & E), we find that the major challenge stems from red herrings (establishing shaky links or incorporating irrelevant content) rather than the widely discussed issue of factual hallucination.

© 2024 Stanford STORM Project

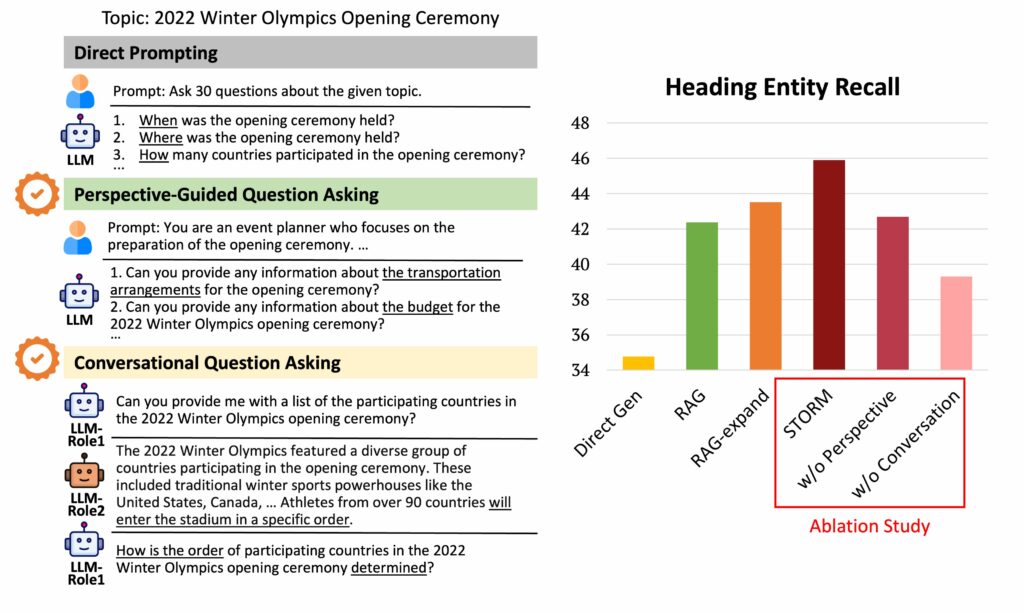

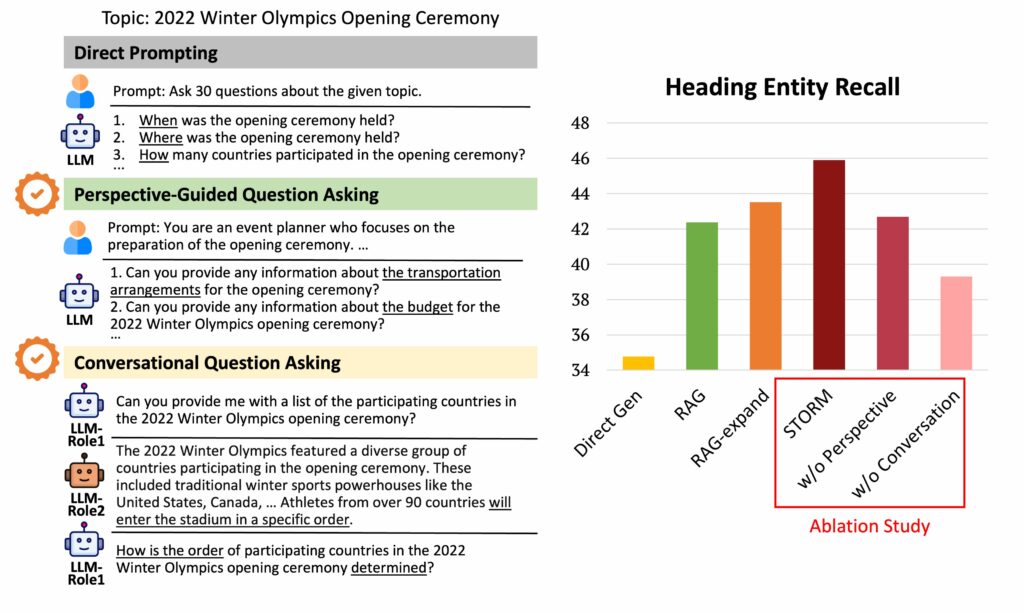

Direct prompting leads to superficial questions, especially for topics in the long tail. To improve the breadth and depth of LLM-generated questions, we propose two approaches:

Perspective-Guided Question Asking: Besides instructing the LLM to generate questions on a given topic, we include a specific perspective in the prompt to provide focus and prior knowledge. While users can specify perspectives, STORM can automatically mine diverse perspectives from relevant Wikipedia articles.
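Perspective-guided prompting can be illustrated with a small prompt builder. This is a sketch under stated assumptions: the prompt wording, the function names, and the example perspectives are invented for illustration and are not taken from STORM's actual prompts.

```python
from typing import List, Optional


def build_question_prompt(topic: str, perspective: Optional[str] = None) -> str:
    """Build a question-asking prompt, optionally focused by a perspective."""
    lines = [f"You are researching the topic: {topic}."]
    if perspective:
        # The perspective gives the model a focus and some prior knowledge.
        lines.append(f"Approach it from this perspective: {perspective}.")
    lines.append("Ask one specific question that would help you write about it.")
    return "\n".join(lines)


def prompts_for_perspectives(topic: str, perspectives: List[str]) -> List[str]:
    """One prompt per perspective, so each simulated writer asks different questions."""
    return [build_question_prompt(topic, p) for p in perspectives]


prompts = prompts_for_perspectives(
    "2022 Winter Olympics",
    ["event planner", "sports historian"],  # hypothetical perspectives
)
```

Calling `build_question_prompt` without a perspective reproduces the direct-prompting baseline, which makes the two settings easy to compare side by side.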

Simulating Conversations: Ram (1991) argues that, in human learning, new questions often arise as answers to previous questions update a person’s understanding of a topic. We encourage the LLM to ask follow-up questions by simulating a conversation between it and a retrieval-augmented question-answering component. Our ablation study demonstrates the effectiveness of the proposed method.
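The conversation loop described above can be sketched as follows. This is a minimal illustration, not STORM's code: `simulate_conversation`, `toy_ask`, and `toy_answer` are hypothetical names, and the question-asking model and the retrieval-augmented answerer are replaced by toy functions so the example runs on its own.

```python
from typing import Callable, List, Tuple

Turn = Tuple[str, str]  # (question, answer)


def simulate_conversation(
    topic: str,
    ask: Callable[[str, List[Turn]], str],
    answer: Callable[[str], str],
    max_turns: int = 3,
) -> List[Turn]:
    """Alternate between asking and answering; each question sees the history."""
    history: List[Turn] = []
    for _ in range(max_turns):
        question = ask(topic, history)
        if not question:  # an empty question means the asker has nothing left to ask
            break
        history.append((question, answer(question)))
    return history


# Toy stand-ins (assumptions): a real system would call an LLM and a retriever.
def toy_ask(topic: str, history: List[Turn]) -> str:
    if not history:
        return f"What is {topic}?"
    if len(history) >= 2:
        return ""
    last_answer = history[-1][1]
    return f"Follow-up on: {last_answer}"


def toy_answer(question: str) -> str:
    return f"(retrieved passage about: {question})"


conversation = simulate_conversation("STORM", toy_ask, toy_answer, max_turns=5)
```

Passing the full history to `ask` is what lets each follow-up question build on the previous answer rather than repeat a generic question about the topic.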

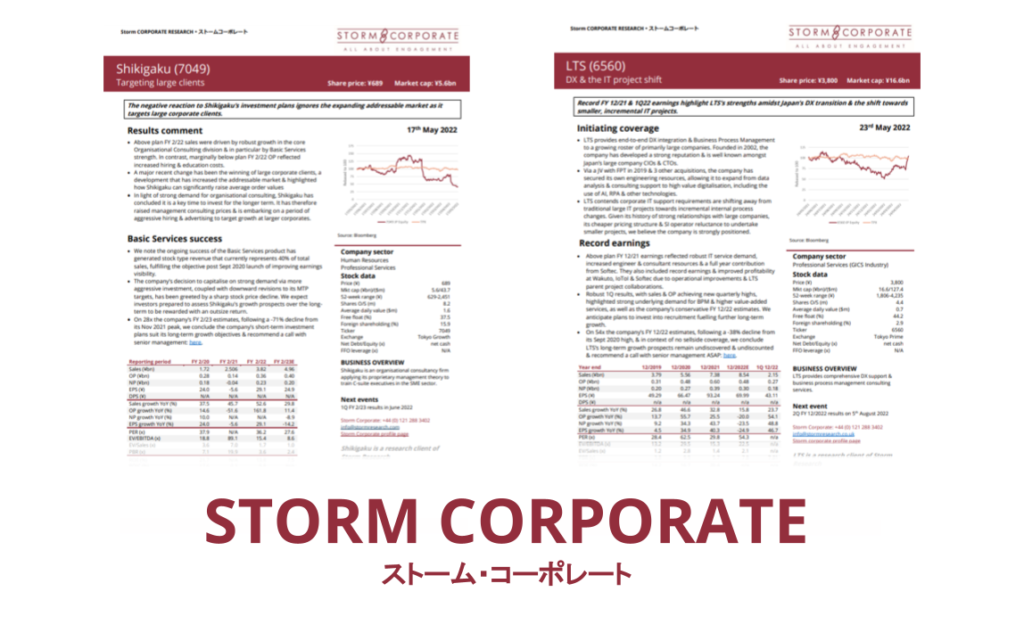

Here are the sample articles that I have created:

– https://storm.genie.stanford.edu/article/cardiologist.-83375

– https://storm.genie.stanford.edu/article/creativity–83392

This tool is impressive and very useful. By using it, we learn many new things, develop our communication and writing skills, and improve our future prospects.
